AI Therapy: The Future of Mental Health or a Dangerous Shortcut?

- Discovery Journal

- Dec 17, 2025

Artificial intelligence used to be the kind of thing you only saw in movies or read about in futuristic tech articles. Now it is part of everyday life. It can write your emails, plan your meals, recommend your next purchase and even talk you through your feelings. Some people are turning to digital chat tools when they feel anxious, lonely or overwhelmed.

On the surface, this sounds incredible! A tool that is always awake, always available and always ready to respond. For people who are struggling with their mental health, the promise of instant comfort is powerful.

But here is where things get complicated.

Not all support is real support. And not all guidance is safe guidance. Recently, more mental health professionals have been raising concerns about the risks of leaning on AI for emotional or psychological care. The more you look into it, the clearer it becomes that AI-based emotional support is not as harmless as it might seem.

Why People Are Turning to AI Therapy for Emotional Support

There are very understandable reasons why people are using AI when they feel distressed.

- People are lonely

- Waiting lists for therapy can feel endless

- Mental health services are stretched

- It feels less scary to talk to a digital tool

- It responds instantly with no judgment

This is especially true for young people who grew up with digital communication as their primary form of connection. The screen feels like a safe space.

But emotional safety and emotional accuracy are two very different things.

The Big Problem: AI Does Not Actually Understand You

AI can mimic understanding, but it cannot actually feel empathy. It does not truly know your situation. It does not remember your life. It cannot sense your tone, read your body language or catch the subtle signs that trained therapists notice.

If a therapist sees you struggling to maintain eye contact or sees your breathing change, they adjust their approach. AI cannot do that.

AI is good at predicting patterns in language, not patterns in people.

So when someone is vulnerable, scared or overwhelmed, the advice given by AI might feel soothing, but it may not be clinically safe.

Concerns have already been raised about AI chat tools offering advice that was misguided, insensitive or even harmful. And while these tools continue to improve, they still lack the one thing that humans need most when they are struggling: real connection.

The Dangerous Shortcut

Therapy is not meant to be fast. Healing is not meant to be instant. Emotional change is not a switch that flips just because an answer appears on a screen.

The idea that you can type a few words into a chat box and receive something that replaces human support is tempting but misleading.

Here is why:

- AI can soothe in the moment, but it does not help you build long-term coping skills

- AI cannot see the bigger picture of your mental health

- AI can offer advice that sounds right but does not apply to your real-life circumstances

- AI might miss signs of severe distress

- AI can encourage emotional dependence

This last point is important. People are starting to form emotional attachments to AI tools. They check in daily. They rely on them for validation, comfort and reassurance.

It becomes a loop. Feel sad, talk to AI, receive comforting words, repeat.

But comfort is not the same as healing.

This loop can prevent people from doing the deeper internal work that actually helps in the long term.

The Rise of Digital Loneliness

One of the most ironic things about AI-based emotional support is that it can increase loneliness even as it tries to reduce it.

When you turn to a screen for comfort, you stop turning to people. You stop building your support system. You stop having real conversations. And you slowly feel more alone.

Digital reassurance is quick but shallow. Human connection is slower but deeper.

We are social beings. We need real voices, real faces and real relationships. AI cannot offer that emotional nourishment. And when people rely on AI to fill those gaps, they often feel emptier than before.

So Should We Avoid AI Completely?

Not at all. AI tools can be incredibly useful when used wisely. They can offer reminders, breathing exercises, information about coping strategies, encouragement to seek professional support and a sense of momentary comfort.

The problem is not the tool. The problem is when people begin using it as a substitute for real support rather than an addition.

Think of AI as a bandage. Helpful for a moment. But not a cure.

The Better Alternative: Reflection That Builds Real Inner Strength

If you want something that actually helps over time, you need tools that grow your self-awareness, your emotional resilience and your capacity to understand yourself.

This is where reflective writing is powerful. Journaling gives you something AI never can:

- A deeper understanding of your own thoughts.

- A record of your emotional patterns.

- Insight into your needs and behaviours.

- A real connection with yourself.

When you write regularly, you start to see your own story more clearly. You see what helps, what hurts and what keeps repeating. You begin to strengthen the part of you that knows how to support yourself.

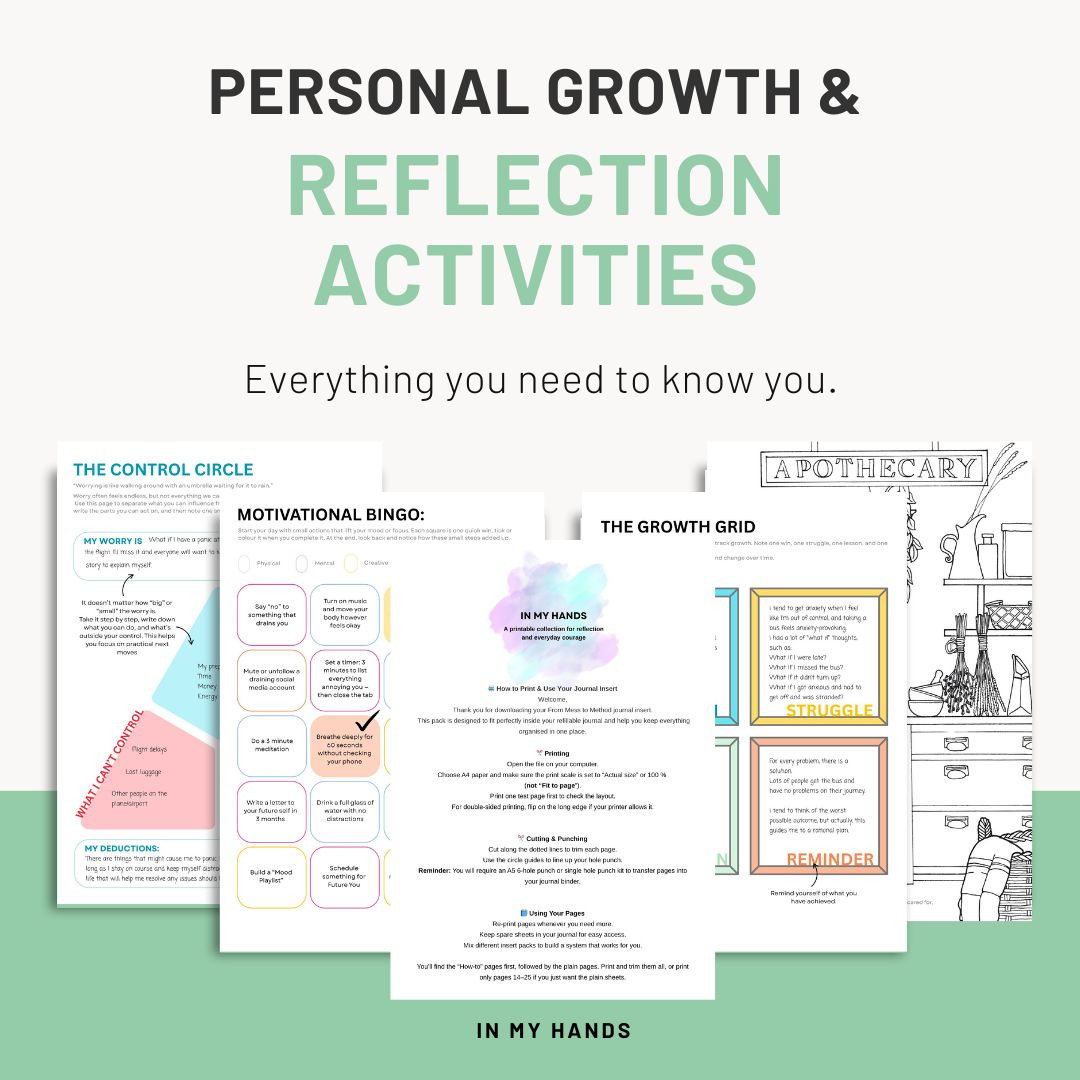

Discovery Journal is a healthy alternative to AI emotional dependence. This specialist journal is designed to guide people into deeper reflection, build lasting mental resilience and process emotions safely.

Discovery Journal is designed by real people who have felt the pain of anxiety disorders and other compounding mental health conditions. They also create digital, printable expansions for particular needs, such as the reflective expansion, which explores sense of self and confidence.

Why Journaling Works Better Than Digital Comfort

Journaling does not try to fix you. It helps you understand yourself. It makes you independent:

- It slows the mind

- It reduces chaos

- It allows emotional release

- It brings clarity

- It builds self-trust

- It helps you recognise what matters

AI gives you instant reassurance. Journaling gives you real transformation.

The best part is that journaling helps you become more connected to your inner world rather than dependent on a digital voice.

Simple Prompts You Can Use Right Now

Here are a few journal prompts that help people step out of emotional autopilot:

- What emotion is loudest today?

- Where do I feel it in my body?

- What triggered it?

- What do I need that I am not giving myself?

- What is one kind thing I can do for myself within the next twenty-four hours?

This kind of gentle reflection can ease emotional overwhelm in a way that fast digital answers often cannot.

Human Healing Will Always Need Humans

The rise of AI has changed our world in huge ways, but mental health still needs heart. Healing still requires patience, compassion, connection and self-awareness.

AI can support you. It can guide you. It can encourage you.

But it cannot replace the human journey of understanding yourself.

So if you are feeling overwhelmed, anxious or uncertain, do not rely solely on a screen for comfort. Use tools that bring you back to yourself. Tools that help you see your own strength. Tools that grow your ability to cope with life.

$39.99

The Disruptor Kit

$4.99

Reflection Expansion Pack
